Can You Digitise an Iron Age Roundhouse With Your Phone?

Yes, but there are plenty of ways to improve the results.

A while ago I had the pleasure of visiting Bournemouth University’s Archaeology Department and meeting some very nice folks who are also interested in 3D and cultural heritage.

One of the people I met was Dr Hayden Scott-Pratt who, among other things, is leading a project to reconstruct an Iron Age roundhouse at Hengistbury Head in Dorset. The project is called Living in the Round.

Hayden is exploring the use cases for 3D digitisation in archaeological work and, as the site of the Living in the Round project is not too far from me, I agreed to pop down for a chat about the topic.

As it turned out, Hayden and a band of volunteers were busy with the actual reconstruction work on the roundhouse when I arrived, so there wasn't much time to talk. The work was time-sensitive, as the thatchers were arriving the following week and the roof frame was not yet finished. I didn’t want to hold things up, so I agreed to meet Hayden another time to talk about 3D.

As I was there anyway, I thought it might be fun to capture some 3D data: aside from the roundhouse, there are lots of other interesting things on site worth capturing. If time allowed, I also wanted to try digitising the thatch-less roundhouse as it stood, as a quick record of the building process.

Here are some of the constraints I was working with:

everything was completely unplanned

I only had my smartphone with me (an iPhone 15)

it was raining the whole time

I had a limited amount of time (~30 mins to capture the roundhouse)

I could only capture images from ground level

As you can imagine, I wasn't hoping to capture research-grade data; rather, I was experimenting with simple, easy-to-use workflows to see what was possible.

While I was waiting…

I had to wait until Hayden and the volunteers took their lunch break before capturing the roundhouse, so in the meantime I grabbed a couple of LiDAR captures using Scaniverse and a couple of videos to process with Luma AI.

Luma AI

Luma AI takes video as its input and converts it into a NeRF, a gaussian splat, and a surface 3D model. The capture process is very easy: just shoot a video of your subject, making sure that every feature you want to reconstruct is visible at some point in the sequence. You then upload the video to Luma AI’s servers, where it is processed into several formats that can be viewed on lumalabs.ai, embedded elsewhere, or downloaded.

I captured a brazier (56-second video), a bit of pottery (48-second video), and the roundhouse itself (62-second video).

You can view the gaussian splat scenes over on lumalabs.ai: brazier, pottery, roundhouse.

Scaniverse

Scaniverse is one of the earliest 3D scanning apps for LiDAR-enabled iPhones, and while it does not produce especially high-fidelity 3D outputs, it is great for making quick scans on the go. Compared to the more laborious “shoot-move-shoot…” process of capturing a photogrammetry image set, using Scaniverse feels very fluid and fun. The textured surface 3D models can be uploaded directly to Sketchfab from the app, or published on Scaniverse’s own platform.

I grabbed two scans with this app, one of a pottery station and another of a flint knapping station. Each scene took approximately 3 minutes to capture.

You can explore these scenes in 3D on Sketchfab.

Capturing the roundhouse

Once the roundhouse itself was clear of volunteers, I sprang into action and started snapping photos of it with my iPhone. Although it had been raining a little the whole time I was on site, it was only at this point that a proper downpour began—typical! 🌧️

I began by circling crab-wise around the roundhouse, snapping photos with my phone held at three different heights: arms in the air, chest height, and squatting down. After one full circuit, I moved a bit closer and did a second round. I then went inside the roundhouse and captured some images facing into the centre of the structure.
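Planning rings like these in advance can be as simple as listing target positions. The sketch below is a hypothetical helper, not part of any app I used: it assumes a roughly flat site centred on the origin, evenly spaced shots, and radii and heights you choose yourself. Each planned pose faces back towards the centre of the building.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraPose:
    x: float        # metres from the building centre (east)
    y: float        # metres from the building centre (north)
    z: float        # camera height above ground, metres
    yaw_deg: float  # heading in degrees, pointing back at the centre


def plan_ring_capture(radii_m, heights_m, shots_per_ring):
    """Hypothetical planner: evenly spaced shots on concentric rings.

    For every ring radius and every camera height, place `shots_per_ring`
    positions at equal angular steps around the origin (the subject's centre),
    each one facing back towards the centre.
    """
    poses = []
    step = 2 * math.pi / shots_per_ring
    for radius in radii_m:
        for height in heights_m:
            for i in range(shots_per_ring):
                theta = i * step
                x = radius * math.cos(theta)
                y = radius * math.sin(theta)
                # Heading from the camera towards the origin, in [0, 360).
                yaw = math.degrees(math.atan2(-y, -x)) % 360
                poses.append(CameraPose(x, y, height, yaw))
    return poses
```

For example, `plan_ring_capture([12.0, 9.0], [0.6, 1.4, 2.2], 72)` gives 432 poses: two rings, three heights (roughly squatting, chest and arms-up), one shot every 5°. The radii and heights are illustrative guesses, not measurements of the roundhouse.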

It was by no means a smooth or easy process:

It was pouring with rain as I took the photos. I could barely see what was on the phone screen, and water droplets kept triggering false touches.

I ran out of storage space on my phone before gathering what I would consider a complete image set for the capture.

The roof beams, as imaged from inside the roundhouse, are woefully underexposed because the phone's auto-exposure compensated for the bright sky I was pointing it at.

I didn’t capture every nook and cranny of the roundhouse.
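On the underexposed beams: brightening a JPEG afterwards is possible, but it only amplifies whatever shadow detail (and noise) survived 8-bit compression, which is one reason shooting RAW helps. As a minimal sketch of what an exposure push does, assuming 8-bit sRGB-encoded pixel values, the gain is applied in linear light, where one stop (EV) doubles the light. The helper names are hypothetical.

```python
def srgb_to_linear(c):
    """Convert an sRGB-encoded channel value in [0, 1] to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c):
    """Convert a linear-light channel value in [0, 1] back to sRGB encoding."""
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * c ** (1 / 2.4) - 0.055


def push_exposure(value_8bit, stops):
    """Brighten (or darken) one 8-bit sRGB channel value by `stops` EV.

    One stop doubles the light, so the gain in linear light is 2 ** stops.
    Results are clipped at white; any highlight detail pushed past it is lost.
    """
    linear = srgb_to_linear(value_8bit / 255.0)
    boosted = min(linear * (2.0 ** stops), 1.0)
    return round(linear_to_srgb(boosted) * 255.0)
```

In a real workflow you'd apply this per pixel (or let a raw developer do it) before feeding images to photogrammetry software.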

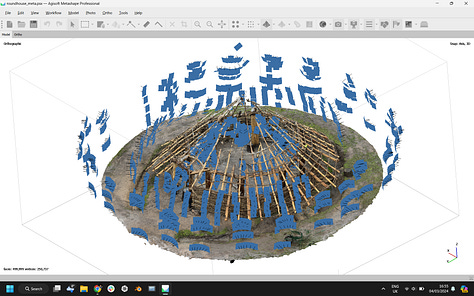

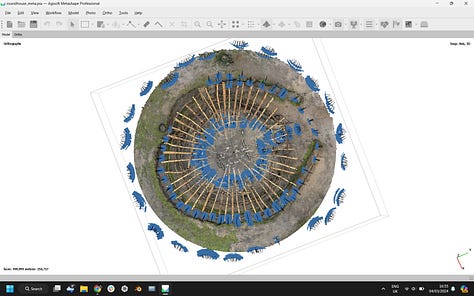

I captured 1070 JPG images in approximately 30 minutes with the default iPhone camera on full auto before the volunteers returned to their work. You can get an idea of the camera positions from the screenshots below with each blue rectangle representing the capture location of an individual photo:

Reconstructing the Roundhouse in 3D

I knew my dataset was not ideal and the time available for me to work on this project is limited, so my plan was always to just throw my images at some photogrammetry software and see what happened. I didn’t pre-process my images in any way (adjusting exposure etc.) and I didn’t mask the subject from the background either (e.g. to ignore the sky).

Here’s how a few pieces of software performed:

Polycam

Polycam is a cloud-based service used to produce surface 3D models, gaussian splats, 360 images, floorplans and more. I had high hopes that Polycam would be able to produce a decent gaussian splat, but alas the processing failed multiple times 🥲. Polycam was also unable to align the entire image set and only produced a partial 3D mesh, but it is quite cool to be able to capture a 360 background image for the scene all in the same app.

As I like the simplicity of using a cloud-based service, I also thought I’d try a couple of other apps that I either hadn’t used before or hadn’t used in a while 👇

Pixpro

I’d never really heard of Pixpro being used in a cultural heritage context before, but I thought I’d give it a go, as they offer a free 14-day trial ($600/yr thereafter). Their desktop app can process data locally, but it can also upload image sets for cloud-based processing, which is what I did in this case.

Despite never having used the app before, I found the experience pretty intuitive, and the 3D outputs were not bad at all. Pixpro aligned almost all of the images and showed a neat geolocated preview of my image set, drawn from the images' EXIF data.
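I don't know exactly how Pixpro builds its map preview, but the raw ingredients are standard EXIF GPS tags: GPSLatitude and GPSLongitude store degrees, minutes and seconds as three rational numbers, and GPSLatitudeRef / GPSLongitudeRef give the hemisphere. A minimal sketch of turning those into signed decimal degrees, assuming each rational has already been read as a (numerator, denominator) pair; the function name is hypothetical:

```python
from fractions import Fraction


def exif_gps_to_decimal(dms, ref):
    """Convert an EXIF GPS (degrees, minutes, seconds) triple to decimal degrees.

    `dms` holds three (numerator, denominator) pairs as stored in the
    GPSLatitude / GPSLongitude tags; `ref` is the matching hemisphere
    reference ('N', 'S', 'E' or 'W'). South and west become negative.
    """
    degrees, minutes, seconds = (Fraction(num, den) for num, den in dms)
    value = degrees + minutes / 60 + seconds / 3600
    if ref.upper() in ("S", "W"):
        value = -value
    return float(value)
```

With made-up values, `exif_gps_to_decimal(((50, 1), (42, 1), (3000, 100)), "N")` gives about 50.7083 and `exif_gps_to_decimal(((1, 1), (45, 1), (0, 1)), "W")` gives -1.75.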

The mesh wasn’t too bad either:

You can explore the output mesh model in 3D on Sketchfab.

Autodesk Recap Photo

There was a time when I really enjoyed using Autodesk photogrammetry software, way back in the days of 123D Catch, Memento, and Remake. I figured I should have a peep at the latest incarnation of their photo reconstruction tech, which comes as part of Autodesk Recap, so I grabbed a free one-month trial (£390/year thereafter).

First off, installing the app was a pain: to run Recap, you also need to install Autodesk’s Connect app and sign up to Autodesk Drive, which took some time. The joke was on me anyway, as even with a free trial of Recap, you need a paid Drive subscription to upload your photos, so I was unable to run my images through this software. Autodesk even has a help centre doc which essentially states “if you want a free trial, you need to buy a paid subscription” 🤷

Oh, well—onwards to my tried and tested local processing options! 👇

RealityCapture

RealityCapture is a market leader in desktop photogrammetry and LiDAR data processing, and often one of my go-to apps for processing an image set. I used RealityCapture to process my 1000+ image set of NHM London’s Hintze Hall, so I thought it would be ideal in this case, too.

The software was able to align several subgroups of images (e.g. groups of 50 or 500 images) into separate components but I couldn’t easily get it to produce a unified 3D model combining all the images. No doubt if I had the time to add in a good few control points manually (e.g. give the software some clues as to how to stitch the sub-components together) I could produce a more complete scene, but I just don’t have time at the moment.
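For anyone wondering why a few control points are enough: two separately aligned components are (ideally) related by a similarity transform with seven unknowns (three for rotation, three for translation, one for scale), and every shared point gives three equations, so three non-collinear points already over-determine it. The sketch below uses Umeyama's closed-form least-squares method with NumPy. It illustrates the idea only and is not how RealityCapture merges components internally; the function name is hypothetical.

```python
import numpy as np


def similarity_from_control_points(src, dst):
    """Estimate scale s, rotation R and translation t with dst ≈ s * R @ src + t.

    src, dst: (N, 3) arrays holding the same N >= 3 control points, expressed
    in the coordinate frames of two separately aligned components.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst

    # Cross-covariance between the centred point sets.
    cov = dst_c.T @ src_c / len(src)
    U, D, Vt = np.linalg.svd(cov)

    # Flip the last axis if needed so R is a rotation, not a reflection.
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0

    R = U @ S @ Vt
    var_src = (src_c ** 2).sum() / len(src)
    s = np.trace(np.diag(D) @ S) / var_src
    t = mu_dst - s * R @ mu_src
    return s, R, t
```

You would then apply `s * R @ p + t` to every point and camera in the component the `src` points came from. Using more than three well-spread points makes the fit more tolerant of small placement errors.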

Metashape

Metashape is another market leader in the desktop photogrammetry / LiDAR processing space, and it managed to align all my images 🎉, so I was able to go on and produce a pointcloud and meshed model. The whole process took a good few hours on my laptop, and the output surface 3D model (as expected) shows quite a bit of noise, especially around the apex of the roundhouse roof. All things considered, though, a decent output.

In the past I have used Metashape to align images, exported a set of undistorted images and camera positions as a bundle.out file, and then processed these in RealityCapture, which generally processes data faster and sometimes produces better 3D. However, Agisoft removed the easy-to-use ‘Undistort Images’ button a while ago, and while it is still possible to do this with a workaround, I didn’t have time to try it out on this occasion.
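The bundle.out file is in the Bundler format. Assuming the standard Bundler v0.3 layout (a header comment, a `<num_cameras> <num_points>` line, then five lines per camera: `f k1 k2`, three rows of the rotation matrix R, and the translation t), a minimal sketch of reading the cameras back and recovering each camera's position might look like this. Point records are skipped, and the function names are hypothetical.

```python
def parse_bundle_cameras(text):
    """Parse the camera blocks of a Bundler v0.3 bundle.out file.

    Returns one dict per camera with focal length f, radial distortion
    k1/k2, rotation R (3x3, row-major lists) and translation t.
    """
    lines = [ln for ln in text.splitlines()
             if ln.strip() and not ln.lstrip().startswith("#")]
    num_cameras, _num_points = map(int, lines[0].split())
    cameras = []
    for i in range(num_cameras):
        # Each camera occupies five consecutive lines after the count line.
        block = [list(map(float, ln.split())) for ln in lines[1 + 5 * i:6 + 5 * i]]
        f, k1, k2 = block[0]
        cameras.append({"f": f, "k1": k1, "k2": k2, "R": block[1:4], "t": block[4]})
    return cameras


def camera_centre(R, t):
    """World-space camera centre c = -R^T t (Bundler maps X to R @ X + t)."""
    return [-sum(R[r][c] * t[r] for r in range(3)) for c in range(3)]
```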

You can explore the output pointcloud and mesh model in 3D on Sketchfab.

Edit: Bonus Gaussian Splat

As I was putting together the latest edition of The Spatial Heritage Review I discovered—thanks to this post by Studio Duckbill—an app called Postshot by Jawset which is currently in beta testing. It’s an app for local NeRF training and gaussian splat processing.

After Polycam and Luma AI failed to process my 1000+ image set into a splat, I wanted to have one last go at it. Lo and behold, Postshot created a really nice splat after about 3 hours of processing.

I was able to export the splat as a .PLY file.

I’m still not sure how to publish a 3D splat online without hosting it myself, but I did use Playcanvas’ Supersplat to edit the resulting splat, mostly to remove the sky and background and to orient the splat correctly. Supersplat also lets me convert the PLY file into a .SPLAT file as well as a compressed PLY.
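To give a feel for what the PLY to .SPLAT conversion involves, here is a minimal sketch that packs one gaussian into a 32-byte record. It assumes the layout popularised by antimatter15's WebGL splat viewer (three float32 positions, three float32 scales, four uint8 RGBA values, four uint8 rotation values) and the raw per-vertex fields of a 3D Gaussian Splatting training PLY (`x/y/z`, `scale_0..2`, `rot_0..3`, `f_dc_0..2`, `opacity`). It is not Supersplat's code, the helper names are hypothetical, and the compressed PLY format is not covered.

```python
import math
import struct

SH_C0 = 0.28209479177387814  # constant of the zeroth-order spherical harmonic


def _to_byte(x):
    """Map a value nominally in [0, 1] to an unsigned byte, clamping."""
    return max(0, min(255, int(x * 255)))


def pack_splat_record(xyz, log_scales, quat, f_dc, opacity_logit):
    """Pack one gaussian into a 32-byte .splat record (assumed layout)."""
    scales = [math.exp(s) for s in log_scales]        # PLY stores log-scales
    rgb = [0.5 + SH_C0 * c for c in f_dc]            # DC SH term -> base colour
    alpha = 1.0 / (1.0 + math.exp(-opacity_logit))    # PLY stores a logit
    norm = math.sqrt(sum(q * q for q in quat))
    # Normalised quaternion components mapped from [-1, 1] to bytes.
    rot = [max(0, min(255, int(q / norm * 128 + 128))) for q in quat]
    colour = [_to_byte(c) for c in rgb] + [_to_byte(alpha)]
    # Little-endian, no padding: 12 + 12 + 4 + 4 = 32 bytes.
    return struct.pack("<3f3f4B4B", *xyz, *scales, *colour, *rot)
```

A full conversion would loop over every vertex in the PLY and concatenate the records; viewers typically also sort splats (for example by size and opacity) before writing.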

In Conclusion

So, can you digitise an Iron Age roundhouse with your phone? Yes, I think so!

Did I do a good job of it? No, not really.

To improve my process and outputs if I tried this again, I think I would:

visit on a dry day 😅

spend some time planning my captures

bring a telescopic camera pole to capture the roundhouse from above

empty my camera storage and shoot images in RAW

spend more time preparing my input image data before processing

try to capture a more complete gaussian splat with Luma AI

Update: Thatching

Yesterday I popped back for the rest of my chat with Hayden and his colleague Mark. It was much sunnier, which was great for the folks from the Ancient Technology Centre and the volunteers who were beginning to thatch the roundhouse.

I grabbed another quick video (120 seconds long) and processed it with Postshot, using 1000 of the available 4000 frames. Here’s the result, cleaned up a bit and viewed in Supersplat.
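I don't know how Postshot decides which frames to keep, but the simplest strategy is even spacing: 1000 of 4000 frames means keeping every 4th frame, or roughly 8 frames per second of the 120-second clip. A hypothetical helper for that (some tools instead favour the sharpest frames, which this ignores):

```python
def evenly_spaced_frames(total_frames, wanted):
    """Pick `wanted` frame indices spread evenly across `total_frames` frames.

    Hypothetical helper: starts at frame 0 and steps by total_frames / wanted,
    so the selection covers the whole video without duplicates.
    """
    if wanted <= 0 or total_frames <= 0:
        return []
    wanted = min(wanted, total_frames)
    step = total_frames / wanted
    return [int(i * step) for i in range(wanted)]
```

For the thatching video, `evenly_spaced_frames(4000, 1000)` returns frames 0, 4, 8 and so on up to 3996.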