Augmenting a UNESCO World Heritage Site with 3D

A spatial heritage project for the Saudi Arabia Ministry of Culture and ArcheoConsultant.

Working for ArcheoConsultant, I was invited by the Saudi Arabia Ministry of Culture’s Jeddah Historic District to capture a number of archaeological finds from the region in 3D, and to produce an experimental augmented reality experience. I’m glad to share a look into my workflow and some of the results!

Al-Balad: Jeddah Historic District (JHD)

Jeddah's history can be traced back to the 7th century AD, when it became the port of Mecca. This role transformed Jeddah into a multicultural hub for trade and pilgrimage. Over the centuries, it was controlled by various powers, including the Abbasids and the Mamluks. After coming under Saudi rule in 1925, Jeddah underwent rapid modernisation, evolving into a major economic and logistical centre, while its historic district, Al-Balad, is now a UNESCO World Heritage Site.

Jeddah Historic District (JHD) was established in 2019 specifically to safeguard the heritage of Al-Balad. The many regeneration projects currently underway in the area are therefore accompanied by rigorous archaeological surveys to document and preserve the underlying historic record.

A small selection of the finds collected from JHD is on temporary display at Nassif House, a cultural centre in a historic house built in the late 1800s for Omar Nassif Effendi, a member of a wealthy merchant family and governor of Jeddah at the time. Jeddah Historic District wanted to explore using technology to expand access to the objects and promote the exhibition. That is where ArchaeoConsultant and I came in, collaborating to digitise the objects in 3D and publish an easy-access augmented reality experience.

Digitisation

My brief for this part of the project was to conduct high resolution photogrammetric capture of each subject, and to prepare optimised copies of each 3D model for display in augmented reality.

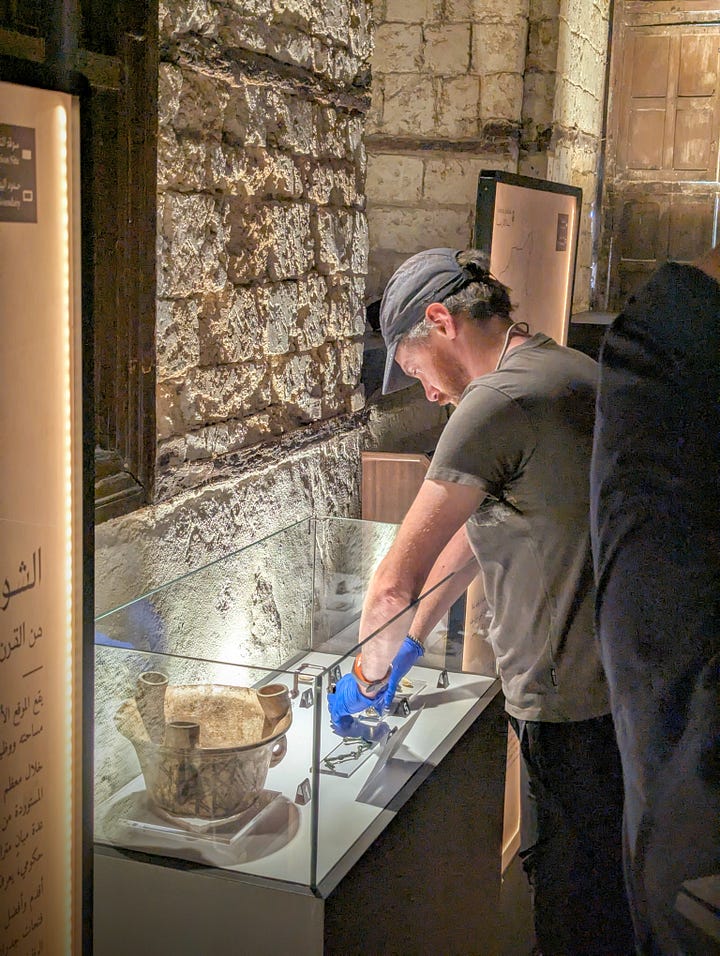

Over the course of an intense week, I worked with ArchaeoConsultant’s Field Director Alistair Dickey to take objects off display one at a time (so that the majority still remained on public display) and capture them in my moveable photogrammetry studio in the JHD offices.

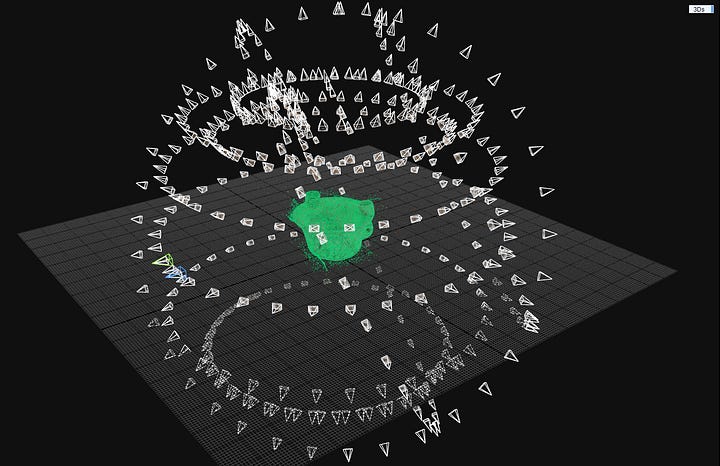

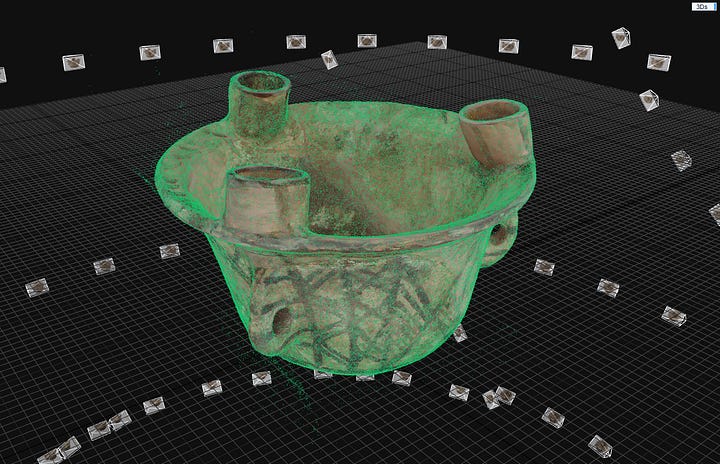

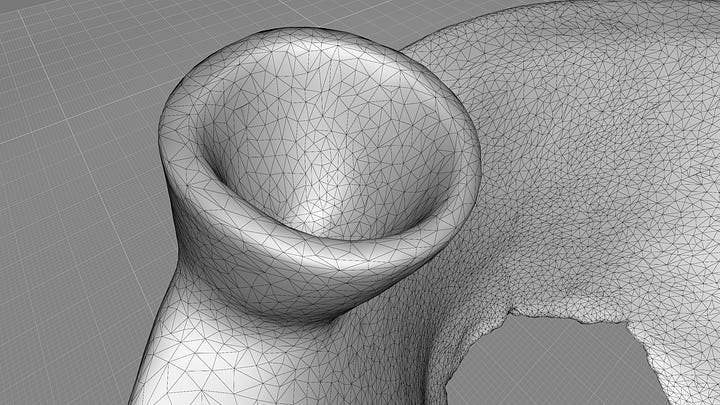

Between 200 and 500 images were captured per subject, depending on the object’s complexity, and each image set was processed in RealityCapture (now RealityScan). I used the software’s quality analysis tool to check that I had good coverage of the subject before it was returned to the exhibition and put back on display.
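RealityCapture's quality analysis works on the reconstruction itself. As a much cruder, hypothetical pre-check before processing, one could look for gaps in a ring of camera positions around the object. The sketch below assumes you know each photo's azimuth (its angle around the subject, in degrees); the function names and the 15° threshold are illustrative assumptions, not part of any tool used on this project.

```python
def max_azimuth_gap(azimuths_deg):
    """Largest angular gap (degrees) between neighbouring camera azimuths in one ring."""
    if not azimuths_deg:
        return 360.0
    angles = sorted(a % 360.0 for a in azimuths_deg)
    # Gaps between consecutive shots, plus the wrap-around gap from the last shot back to the first.
    gaps = [b - a for a, b in zip(angles, angles[1:])]
    gaps.append(360.0 - angles[-1] + angles[0])
    return max(gaps)


def ring_is_covered(azimuths_deg, max_gap_deg=15.0):
    """Crude proxy for coverage: True if no two neighbouring shots are more than max_gap_deg apart."""
    return max_azimuth_gap(azimuths_deg) <= max_gap_deg


# Example: a ring shot every 10 degrees, with three consecutive frames missing.
ring = [a for a in range(0, 360, 10) if a not in (120, 130, 140)]
print(max_azimuth_gap(ring))  # 40.0
print(ring_is_covered(ring))  # False
```

A real coverage check also has to consider elevation, overlap between images, and surfaces that no camera sees, which is why a reconstruction-based tool is the more reliable test.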

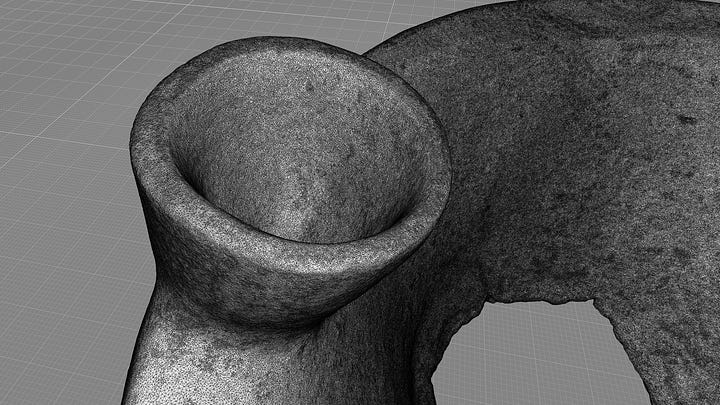

For each model, I exported both a high-resolution (~5 million faces) and a low-resolution (50,000 faces) 3D model. These, along with a super-optimised version (see below) and the input image set, formed the 3D data deliverables for this part of the project.

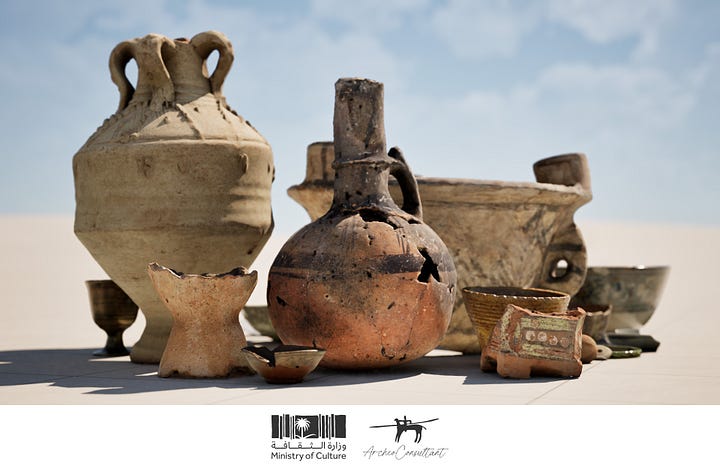

The subjects ranged in size from fairly large pottery up to 40 cm across (like the incense burner pictured above) all the way down to a tiny bone button; materials included glazed and unglazed ceramics, glass, and metal…

Sidenote: Layout & Rendering

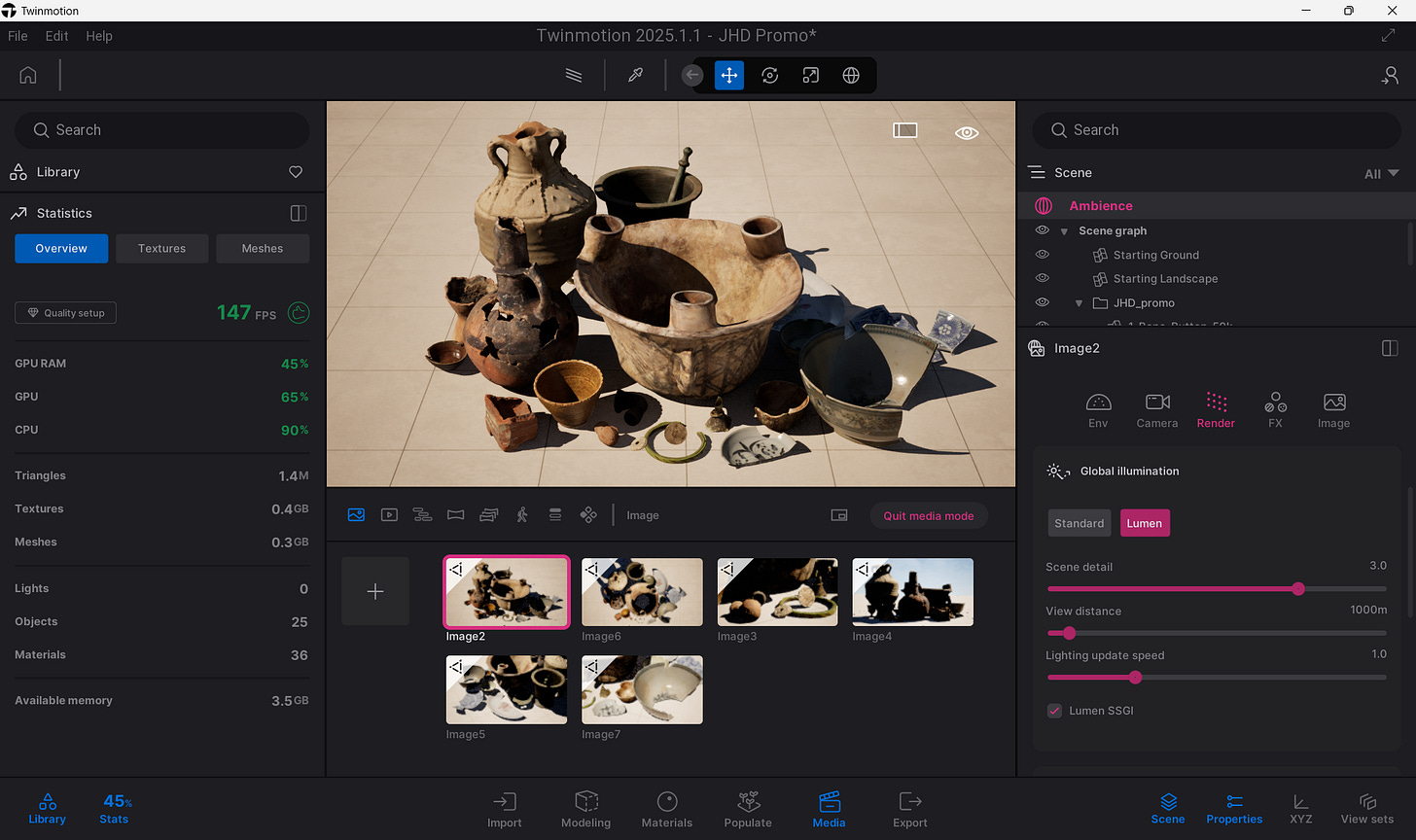

I used Blender to arrange the 3D models as a group and to export them as a single GLB file. I’ve used Blender a lot over the years and find that, once you learn a few shortcuts, you can quickly and easily position, scale, rotate, and align scenes like this.
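Positioning was done by hand here, but the "line the objects up in a row" part is simple enough to sketch in code. This hypothetical helper assumes each object's width along X is already known (in Blender, an object's `dimensions.x` gives its bounding-box width) and returns centre positions that keep a fixed gap between objects and centre the whole row on the origin. Inside Blender, those values could be written to each object's `location.x` before exporting with `bpy.ops.export_scene.gltf(export_format='GLB')`; that step is not shown, and this is not necessarily how the scene above was built.

```python
from dataclasses import dataclass


@dataclass
class Placement:
    name: str
    x: float  # centre of the object along the X axis, in metres


def lay_out_in_row(widths, gap=0.05):
    """Place objects left to right with a fixed gap, centring the whole row on X = 0.

    widths: list of (name, width_in_metres) pairs, e.g. taken from each model's bounding box.
    """
    total = sum(w for _, w in widths) + gap * max(len(widths) - 1, 0)
    cursor = -total / 2.0  # left edge of the row
    placements = []
    for name, width in widths:
        placements.append(Placement(name, cursor + width / 2.0))
        cursor += width + gap  # move past this object and the gap after it
    return placements


# Hypothetical objects: the 40 cm incense burner plus two illustrative sizes.
row = lay_out_in_row([("incense_burner", 0.40), ("bowl", 0.20), ("button", 0.02)])
for p in row:
    print(f"{p.name}: x = {p.x:.2f} m")
```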

I then loaded the GLB from Blender into Twinmotion, where I tweaked some material settings, adjusted the lighting and camera settings (field of view, depth of field, position, motion paths, etc.), and added a little post-processing until I was happy with the results.

Optimisation for Augmented Reality

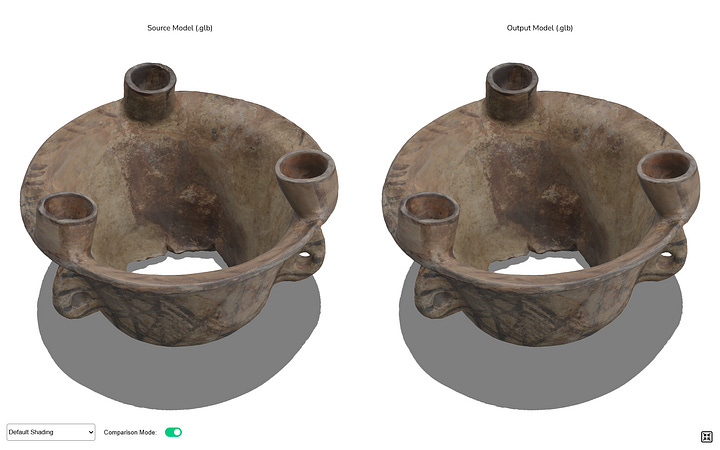

While I could use RealityCapture to output an AR-ready 3D model directly, I prefer to let the excellent RapidPipeline (RP) do it for me. I simply upload my high-resolution asset, select a 3D processing preset (tweaking it a little if necessary), and RP runs in the cloud, optimising face counts, texture formats and counts, mesh compression, and more.
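Texture count and resolution matter because image files (JPEG, PNG) are decoded back into raw pixels on the device, so a small file on disk can still occupy a lot of GPU memory. This sketch estimates the memory for one uncompressed RGBA8 texture (4 bytes per pixel), optionally including the full mipmap chain. The resolutions are illustrative, not the textures from this project, and GPU-compressed formats (for example KTX2 with Basis Universal in glTF) would use considerably less.

```python
def texture_bytes(width, height, bytes_per_pixel=4, mipmaps=True):
    """Approximate GPU memory for one uncompressed texture (RGBA8 = 4 bytes per pixel)."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_pixel
        if not mipmaps or (w == 1 and h == 1):
            break
        # Each mip level halves both dimensions, never dropping below 1 pixel.
        w, h = max(w // 2, 1), max(h // 2, 1)
    return total


MIB = 1024 * 1024
for size in (8192, 4096, 2048):
    print(f"{size}x{size}: {texture_bytes(size, size, mipmaps=False) / MIB:.0f} MiB, "
          f"{texture_bytes(size, size) / MIB:.1f} MiB with mipmaps")
```

An 8192 × 8192 texture needs 256 MiB before mipmaps, whereas a 2048 × 2048 one needs 16 MiB, which is why reducing texture resolution and count is one of the biggest wins for mobile AR.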

For this project I used the ‘AR Core’ preset, adjusting it to simplify the mesh to 25,000 faces, cap the file size at 3 MB, and output both GLB and USDZ formats.
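RapidPipeline enforces these limits itself, but it can be handy to double-check an exported GLB independently. The hypothetical check below follows the glTF 2.0 binary layout (a 12-byte header, then a JSON chunk) and counts triangles from accessor counts without decoding any geometry, then compares the result against the 25,000-face and 3 MB limits used here. It is not part of RapidPipeline; it counts each mesh once even if several nodes instance it, skips non-triangle primitives, and assumes 3 MB means 3,000,000 bytes.

```python
import json
import struct

GLB_MAGIC = 0x46546C67   # b"glTF" read as a little-endian uint32
CHUNK_JSON = 0x4E4F534A  # b"JSON"
TRIANGLES = 4            # glTF primitive mode for triangle lists (also the default)


def glb_face_count(data: bytes) -> int:
    """Count triangles in a GLB by reading only its JSON chunk."""
    magic, version, _length = struct.unpack_from("<III", data, 0)
    if magic != GLB_MAGIC or version != 2:
        raise ValueError("not a glTF 2.0 binary file")
    chunk_length, chunk_type = struct.unpack_from("<II", data, 12)
    if chunk_type != CHUNK_JSON:
        raise ValueError("first chunk must be JSON")
    gltf = json.loads(data[20:20 + chunk_length])
    accessors = gltf.get("accessors", [])
    faces = 0
    for mesh in gltf.get("meshes", []):
        for prim in mesh.get("primitives", []):
            if prim.get("mode", TRIANGLES) != TRIANGLES:
                continue  # points, lines, strips and fans are skipped for simplicity
            if "indices" in prim:
                count = accessors[prim["indices"]]["count"]  # 3 indices per triangle
            else:
                count = accessors[prim["attributes"]["POSITION"]]["count"]  # 3 vertices per triangle
            faces += count // 3
    return faces


def within_budget(data: bytes, max_faces=25_000, max_bytes=3_000_000) -> bool:
    """True if the GLB meets both the face budget and the file-size budget."""
    return glb_face_count(data) <= max_faces and len(data) <= max_bytes


# Usage (hypothetical file name):
# from pathlib import Path
# print(within_budget(Path("incense_burner_ar.glb").read_bytes()))
```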

This preset was able to crush my high-resolution model down from 4,997,620 faces to just 25,000, shrinking the file from 675.25 MB to 1.57 MB (!). Compressing a huge 3D file this aggressively inevitably costs some visual quality, but the optimised model is still clearly recognisable and, more importantly, loads far faster in a mobile web experience and performs much better across a broader range of devices.
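To put those figures in perspective, the reduction can be expressed as a factor and as a percentage removed. Using only the numbers reported above, the optimised model has roughly 200× fewer faces (about 99.5% removed) and a file roughly 430× smaller (about 99.77% removed).

```python
def reduction(before, after):
    """Return (reduction factor, percentage removed) for a before/after pair."""
    return before / after, 100.0 * (1.0 - after / before)


faces_factor, faces_pct = reduction(4_997_620, 25_000)
size_factor, size_pct = reduction(675.25, 1.57)  # megabytes, as reported above
print(f"faces: {faces_factor:.1f}x fewer ({faces_pct:.2f}% removed)")
print(f"size:  {size_factor:.1f}x smaller ({size_pct:.2f}% removed)")
```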

Augmented Reality with Adobe Aero

For the AR experience, JHD wanted end users to be able to:

- open an image-tracked version of each subject in AR;
- use it on both Android and iOS;
- access it without downloading anything;
- use some basic interactions.

Based on these criteria, and the short-term, experimental nature of the project, we chose to deliver this part of the project with Adobe’s free Aero app. I had previously used Aero to create some AR business cards, and its intuitive interface and reliable delivery were perfect for this project.

Testing tap interactions in the Adobe Aero Beta desktop app: the 3D model is anchored to the QR code, and each time a user taps the mesh, the model rotates by 90° so that it can be explored from every angle.
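In Aero this interaction is set up visually as a behaviour (a tap trigger followed by a rotate action), so no code is involved. Purely to make the logic explicit, here is a hypothetical sketch of the tap-to-rotate behaviour: each tap advances the model by a fixed 90° step and wraps at 360°, so four taps bring it back to its starting orientation. The class name and the choice of a vertical rotation axis are assumptions, not Aero's API.

```python
class TapToRotate:
    """Hypothetical model of the tap interaction: each tap turns the model by a fixed step."""

    def __init__(self, step_deg=90):
        self.step_deg = step_deg
        self.yaw_deg = 0  # current rotation, assumed to be about the vertical axis

    def on_tap(self):
        # Advance by one step and wrap so the angle stays in [0, 360).
        self.yaw_deg = (self.yaw_deg + self.step_deg) % 360
        return self.yaw_deg


model = TapToRotate()
print([model.on_tap() for _ in range(5)])  # [90, 180, 270, 0, 90]
```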

You can see some early demos of the simple interactivity in this next video. Unfortunately, I’d have to be back in Saudi Arabia to show you how the project was implemented in Al-Balad 😄

Bonus: the whole scene works well in AR, too:

In Summary

I hope this gives you an idea of what is involved in the workflow of a project like this, and what you can create using different tools, some of which are free to use.

If this project were designed for use over a longer period, the one thing I would change is to host the AR experiences on a service such as Zappar, 8th Wall, or MyWebAR.

It was an amazing experience to visit a part of Saudi Arabia, if only for a short time, and I am grateful to ArcheoConsultant, Jeddah Historic District, and the Saudi Arabia Ministry of Culture for the opportunity to work with such wonderful historic artifacts.
